After figuring out that the data warehouse’s hardware isn’t keeping up with the data we were collecting, the application team reached out to three groups: the vendor, our Windows engineers, and are storage engineers.

First Shop Visit: The Vendor

One thing you take for granted as a creator, whether its of cars, buildings or applications, is your ability to change your creation when something’s not working or not performing as well as you can. When you enter the world of COTS (commercial off-the-shelf software), you don’t have the option to change many features of what you purchased. You end up needing to go to the vendor for support. What they are allowed to do depends a lot on how they designed their software’s architecture, the cost of the modification and the impact to your other customers. You may discover that the software you get may not be as configurable as you expected and you have to invest a lot of capital to make things run better.

I had the opportunity to learn from SQL Server MCM Brent Ozar in Washington DC a couple years ago. Brent has a wonderful series on performance tuning when you can’t fix the queries. He highlights first and foremost that you have to identify what you’re allowed and not allowed to modify in your struggling application. That will dictate if your options include minor code and data model changes or a major capital spend to throw hardware at the performance problem.

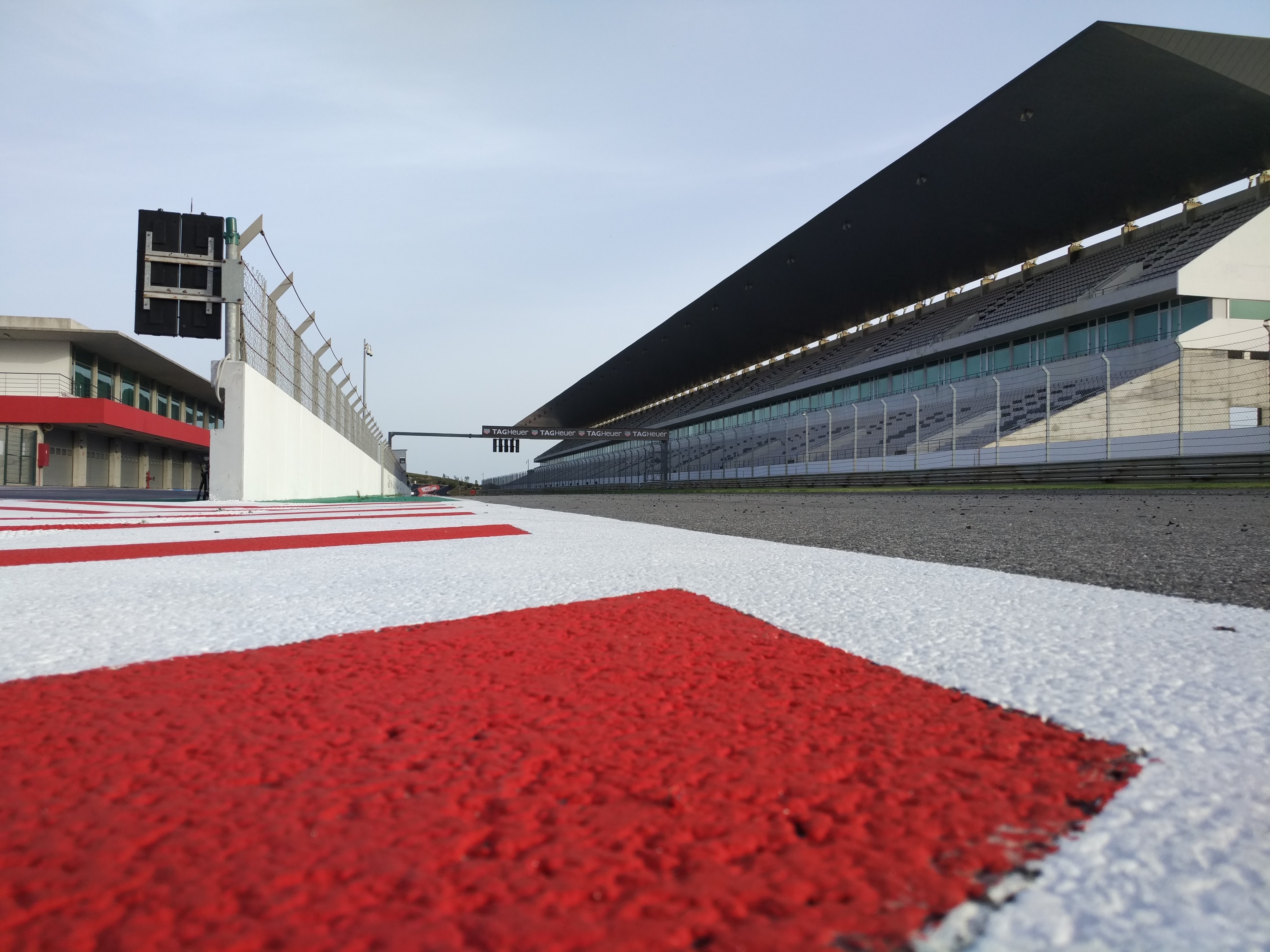

In the case of our hybrid data warehouse, like a car that’s not running very well, it was taken to the the shop. In this case, the app team reached out to the vendor about how the application was slowing down as more and more data was added from our systems. Unfortunately, customization of their code and model couldn’t be done as they were supporting other clients in the healthcare sector.

What they did recommend were tried-and-true workarounds, like pre-aggregating data with batch processes to prepare critical reports ahead of time, reducing maintenance windows, and offloading costly functions like DBCC checks to other servers.

A later release of their product incorporated peer-to-peer replication, allowing two or more servers to handle ETL and reporting functions jointly. But with all replication, introduction of new elements to the schema has to be done precisely otherwise you’ll be rebuilding from a snapshot at 3am. After a couple of harrowing attempts fixing replication, it was easier to compromise and update one server weekly for R&D reporting, leaving the primary ETL server for operational reports.

The Second Shop Visit: The Engineers

After exhausting all opportunities to create to pre-aggregate data and scale out to additional servers, the SAN admin team made an announcement: we’re going to solid-state drives.

Once again, we got really interested. We all heard the promise of going to drives offering 300,000 IOPS. Compared to the rotational SAN with each spindle giving a hundred IOPS and at most getting 15,000 IOPS in an array, 300,000 IOPS was like a dream come true. Performance should be linear, right? We should see things running 15 to 20 times faster maybe. What are we waiting for?

So, we took the plunge. We coordinated downtime, moved 20 LUNs of data from the rotational SAN to three LUNs of solid-state SAN. We powered up SQL, kicked off our jobs and waited for the magic to happen…

Overall performance improvement: 14%.

Things are looking grim now. You spend six figures on new SSD storage and only get a 14% improvement. What went wrong? What did we miss…?

Leave A Comment